Kallidus Learn repeat training

Company

Kallidus is a B2B SaaS provider in the HR and Learning & Development space that aims to unleash the potential in people through its recruitment, learning and performance software.

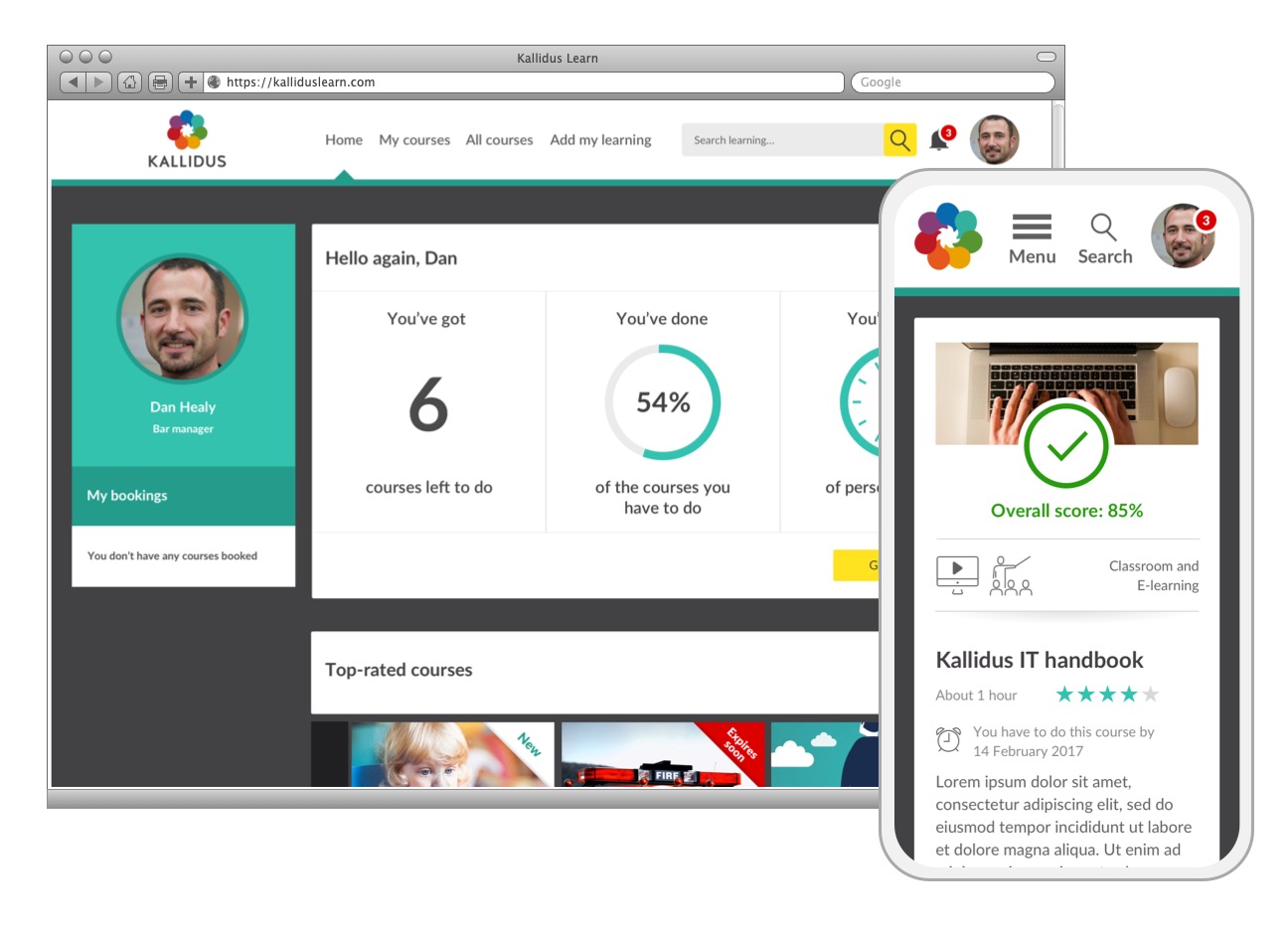

Product

Kallidus Learn is a learning management system (LMS) that organisations adopt to provide continual learning opportunities for their employees and to stay compliant with the mandatory training required in their industry.


My role

Lead UX designer

The team

I worked closely with the product owner and the developers in the product team.

My responsibilities

- User research

- Wireframing and prototyping

- Moderated user testing

- Unmoderated qualitative and quantitative user testing

The challenge

Kallidus customers wanted their employees to remain compliant with mandatory training that must be repeated periodically to maintain compliance (for example, first aid training, which has to be renewed every 3 years). They wanted this to be managed through the Kallidus learning management system.

The minimum viable product of Kallidus’ learning management system did not yet offer a solution to this problem.

The project goals

Allow customers to set up and configure courses that employees repeat periodically, and ensure employees understand what is expected of them for these courses at any point in time.

Approach

There were two customer bases that I needed to satisfy in this project:

- Kallidus Learn customers (on the new SaaS product)

- Kallidus Classic LMS customers (on the old product)

Classic LMS customers already had functionality to configure repeating courses. Since one of the company goals was to move Classic LMS customers over to Learn, it was important to keep these customers in mind and to be able to migrate them easily to the new system with their repeating courses intact. Learn customers, by contrast, had none of this functionality and were rapidly running out of time to set it up before their employees’ courses expired and became non-compliant.

There were also two personas involved in this work: the administrator, who would set up the course in the system, and the employee, who would need to complete the course on a regular basis. It was important to focus on both of these journeys.

Summary of steps

Admin journeys

Research

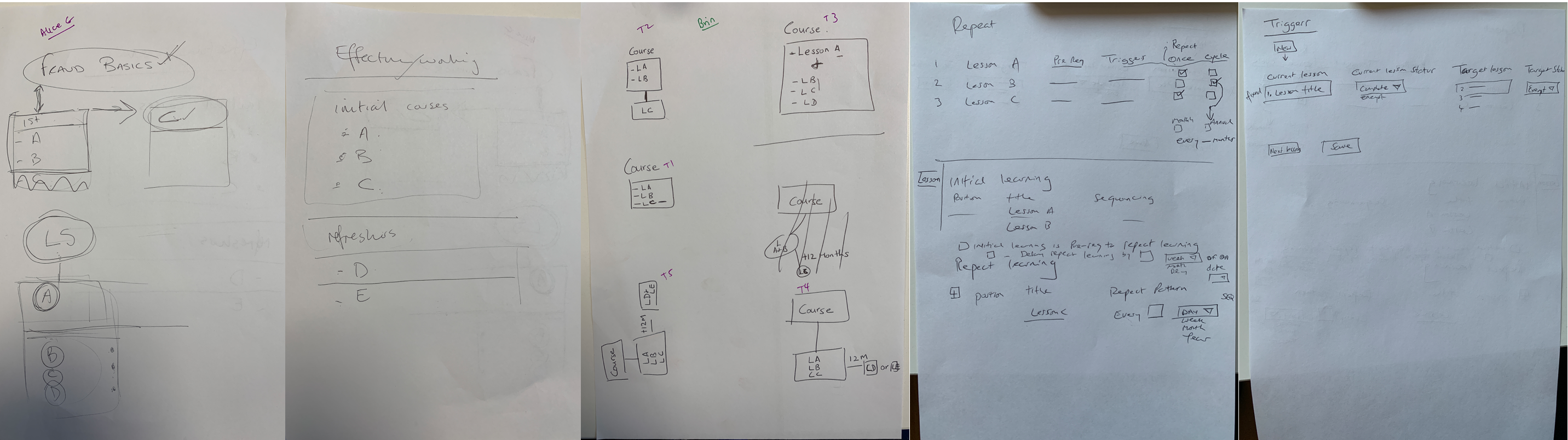

I conducted research interviews with 5 customers across the two segments to understand the problems they were facing and their needs around repeating training.

In these interviews I described a common example of a course that needs to be repeated periodically, then ran a participatory design exercise: I provided pen and paper and asked each participant to draw how they would expect to set up a course like the one described in the system. This helped me understand administrators’ mental model of setting up courses, particularly those that employees need to repeat.

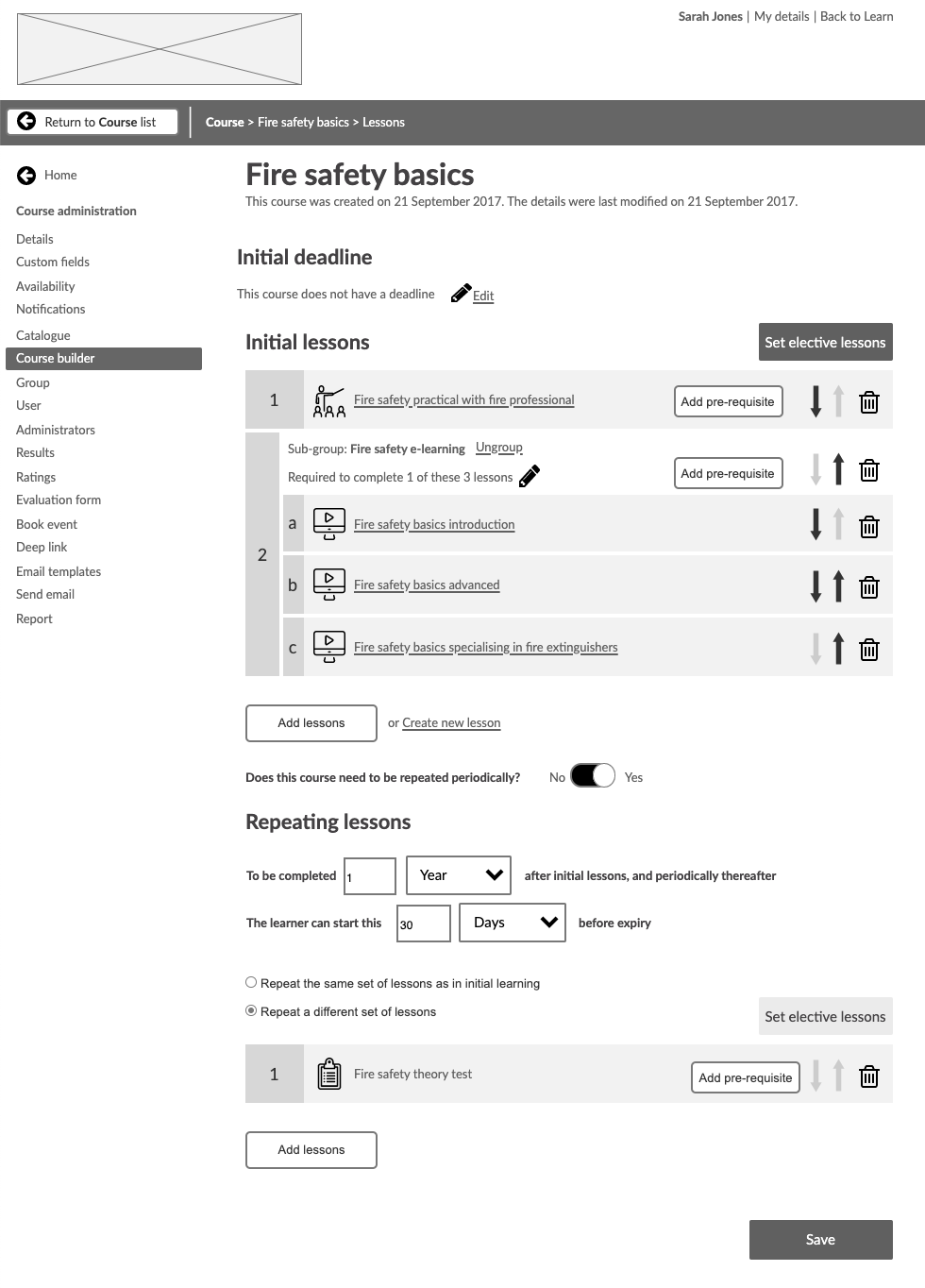

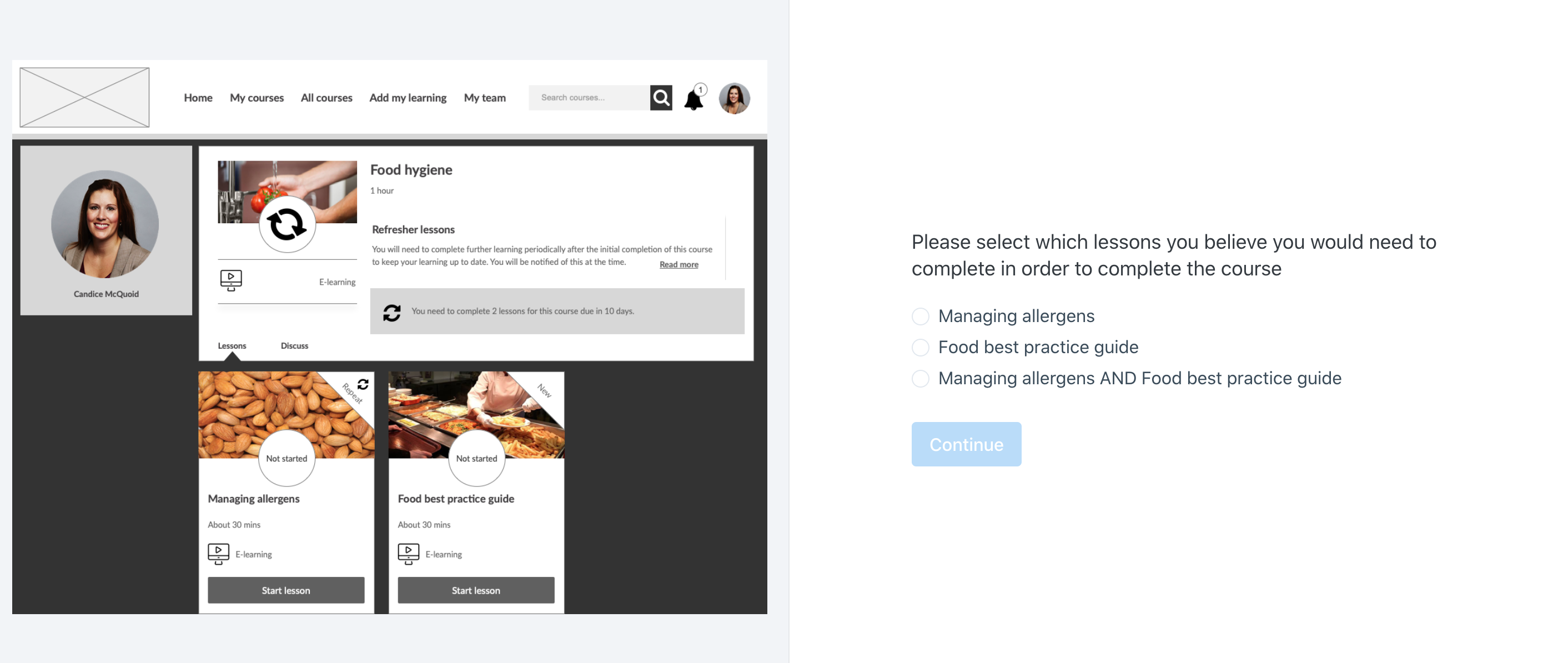

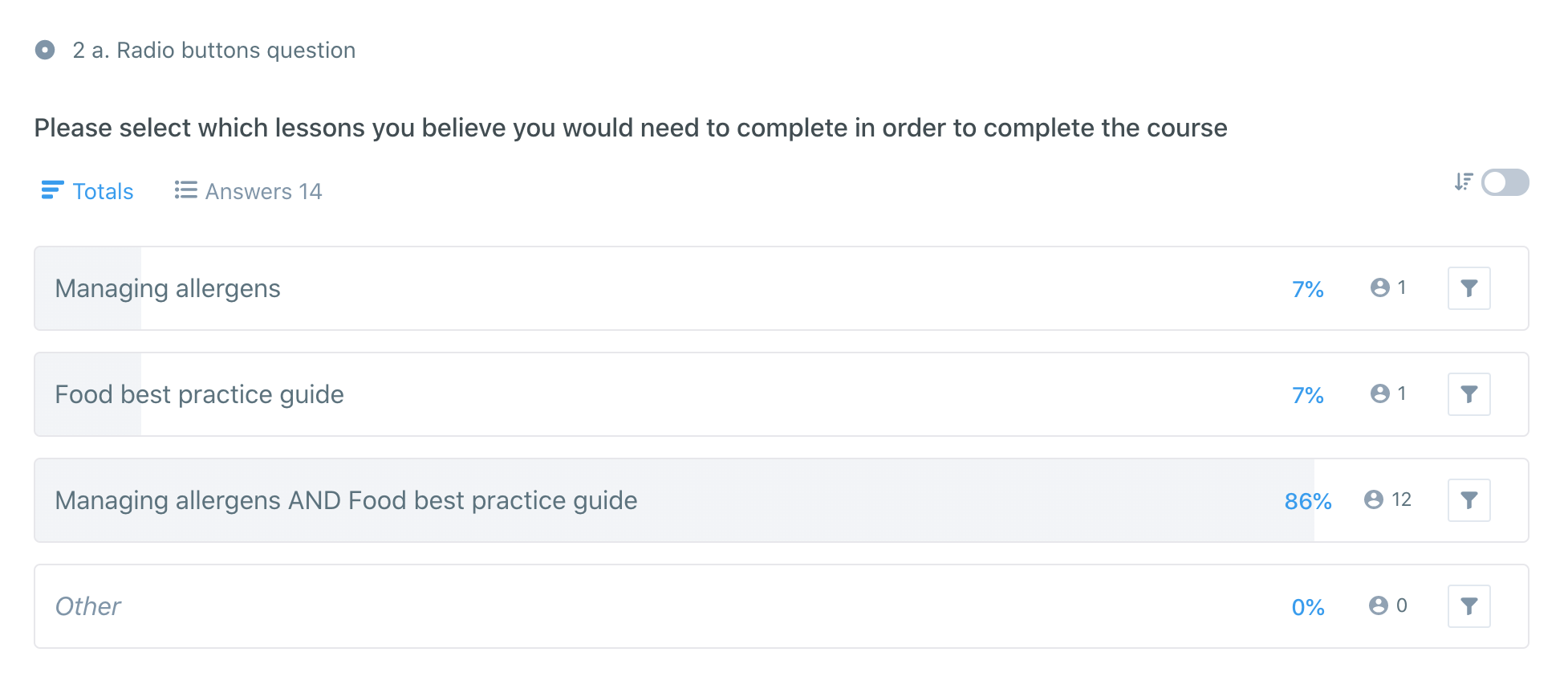

During the research it also transpired that, beyond the need for employees to repeat certain courses, administrators had a separate need in course configuration: allowing employees to choose which lessons to complete within a course. In other words, employees could pick one lesson from a handful of options, and completing any one of them would count towards course completion.

I took this additional requirement back to the product owner, and we agreed that since we were already designing and testing in this area of the system, we should consider it alongside the repeat training work so that our solution could be extended to accommodate it in future (even if we weren’t going to build it straight away).

Design

Using the insights from the administrator research, and with an understanding of administrators’ mental model for setting up repeating courses, I started sketching designs for an admin interface to set up and configure repeating courses.

These sketches evolved into an interactive prototype which was used during feedback sessions with administrators.

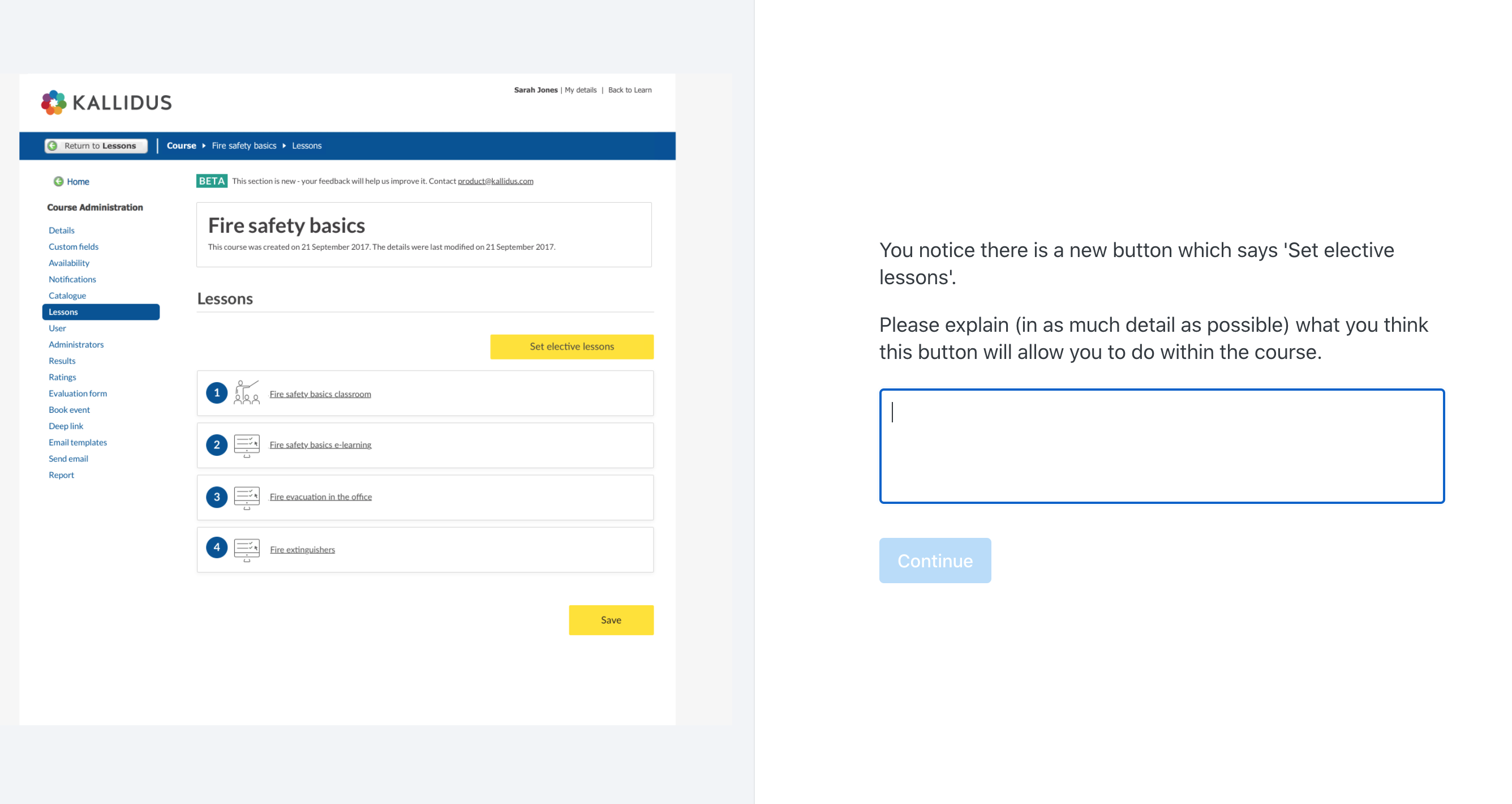

Beta testing

We beta tested the first phase of the administration screens and gathered feedback directly from the customers who took part. This was an effective way to gather feedback because customers were using the functionality with their real-life courses rather than the fictional scenarios of usability testing. The feedback was positive; however, some of the terminology in the interface confused users, so we took this away to refine further.

Iterating

To determine how best to update the terminology that was confusing our administrators, I ran an unmoderated qualitative user test via Usability Hub. This allowed us to reach a wider audience of administrators by email, who could complete the test in their own time (in approximately 2 minutes). We A/B tested several variants of the copy, each with the same test question so that we could compare their scores against each other. There was a clear winner, and that copy was then transferred into the live system.

Employee journeys

Design

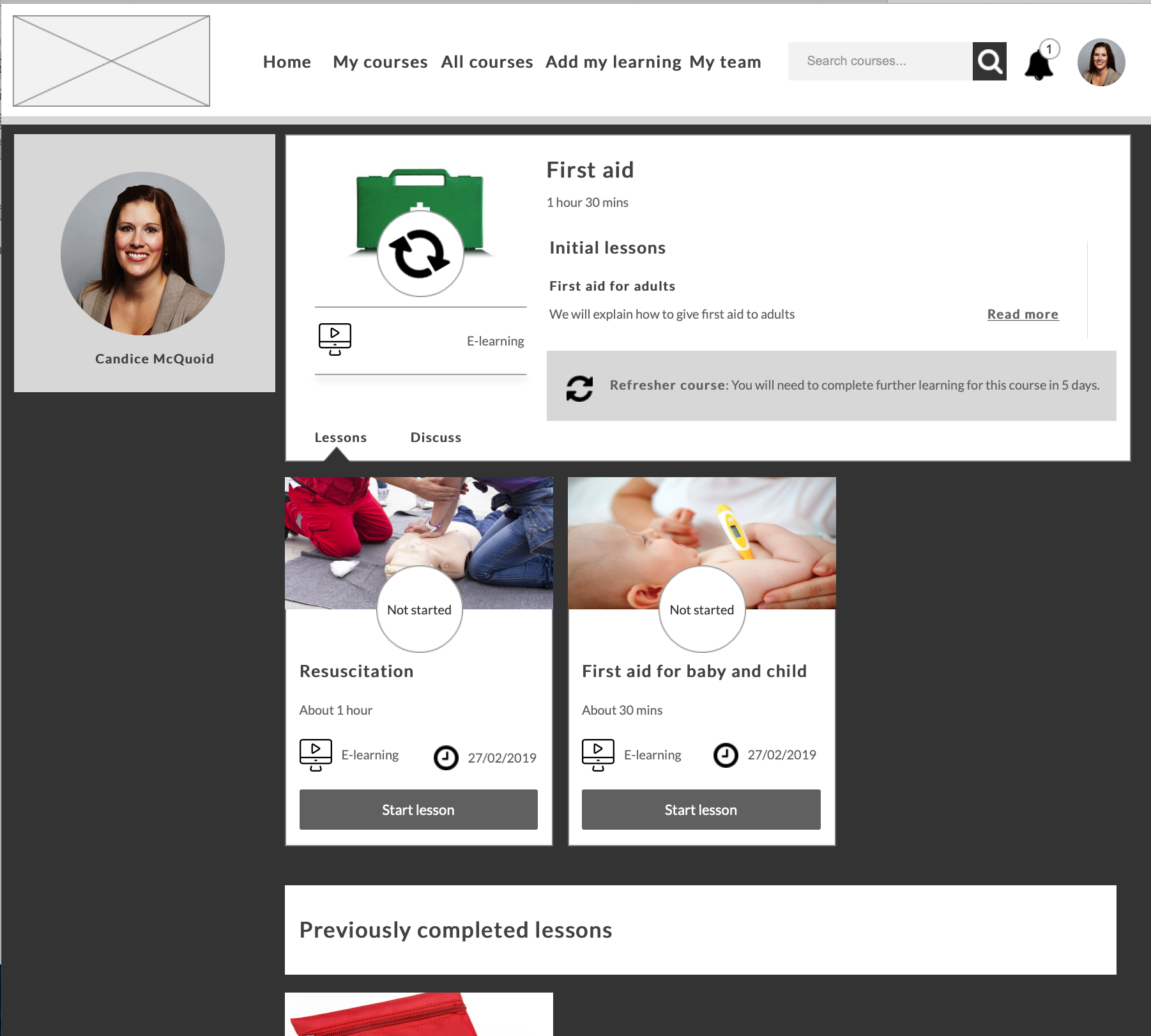

Based on the requirements captured in the admin research, I started exploring how the course could be presented to employees within the learning management system, and how it would appear at different points in the course’s completion: before starting, after the first completion, when it was due to be done again, and after it had been completed again.

I started with sketches, then took the most scalable designs forward into an interactive Axure prototype for testing.

User testing

Customers who had participated in the admin user research offered to put forward employees from their organisations to take part in the employee usability testing. We tested with 5 participants.

First, I put together a testing script that outlined different scenarios (representing different course configurations, and whether the employee had completed the course in the past) so that I could test each scenario and ensure the design was fully thought through.

Using this script, I then fleshed out the interactive prototype. The prototype had a story running through it that I could walk participants through, which helped them relate to the questions I was asking.

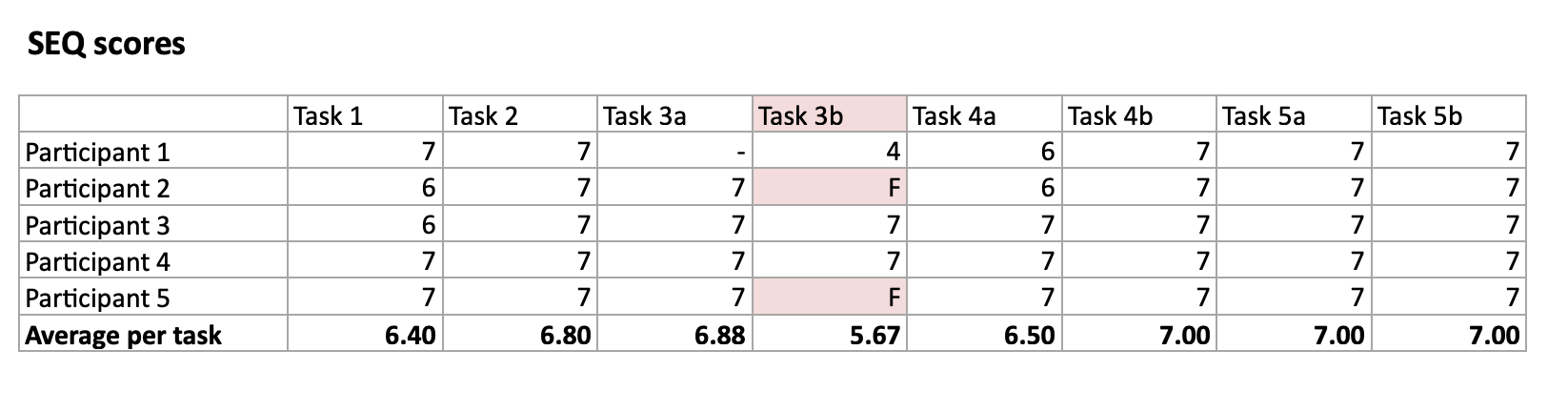

Using the Single Ease Question (SEQ), which asks participants to rate how easy a task was on a 7-point scale, I gathered a score for each task from each participant. Seven of the 8 tasks scored highly, with ratings of 6 or 7. However, one task received lower scores, and a few participants failed to complete it successfully. The design for that task clearly needed to be revisited.

Iteration

Since one task did not perform as well as the rest, I focused my iteration efforts on refining the design for that task. I chose to retest it with an unmoderated quantitative method in order to gather more feedback in a shorter time frame. I used Usability Hub, which lets you send click tests to participants by email to complete in their own time, with the results captured in Usability Hub.

The results for the refined design were much better, so I now had a full set of designs to move forward with.

Conclusion

This project has been running for some time, with several phases developed so far and more to come.

From a UX perspective, a range of UX methods were used and combined to quickly home in on a solution that satisfied user needs and was easy to use for everyone involved. Combining moderated and unmoderated testing, and quantitative and qualitative methods, allowed me to gather appropriate feedback at various points in the design process.